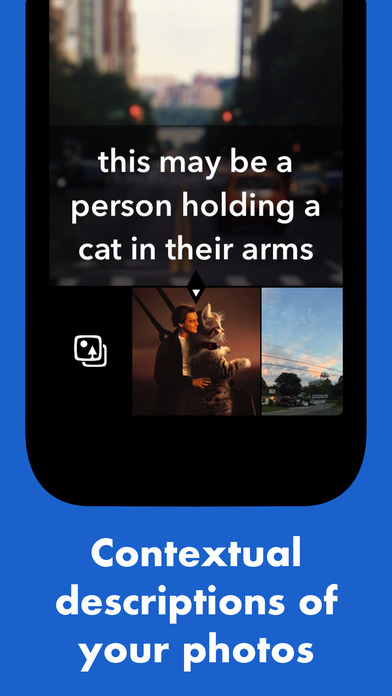

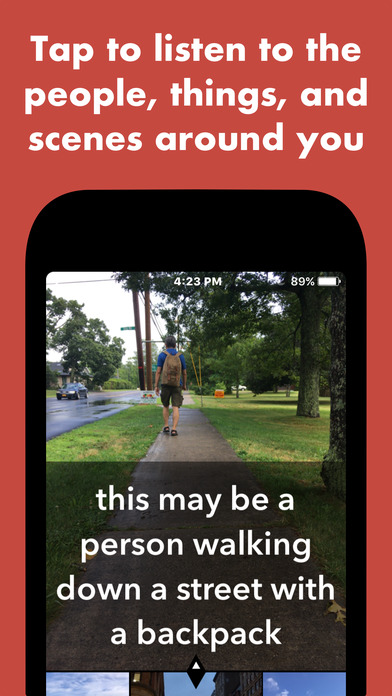

Perigo Sight is an artificially intelligent tool designed to help the blind and visually impaired understand more about their surroundings as well as their photos. With a single tap, Perigo quickly speaks a natural-language description of what it sees. Perigo can understand thousands of common objects and visual scenes that you might encounter in your everyday life.

Easy to Use — On the upper portion of the screen, you can tap once to describe your surroundings. On the lower portion, you can swipe left and right with one finger to explore and describe your library of photos.

Contextual Descriptions — Perigo is able to recognize the objects around you and in your photos and understand the relationships between them.

Blazing Fast — Perigo's snappy AI can understand your photos in just one or two seconds.

No Internet Needed — You'll never have to worry about losing internet access because Perigo runs entirely on your phone.

Totally Private — None of the photos you scan with Perigo will ever leave your phone. Feel free to tap away in complete privacy.

VoiceOver Compatible — Whether or not you have VoiceOver enabled, Perigo will audibly speak its descriptions.

Works in the Dark — When it needs to, Perigo will automatically use your iPhone's flash to illuminate dim environments.

Completely Free — Perigo and all its features are, and always will be, free of charge.

I hope Perigo empowers you to explore your photos and the world around you. As a disclaimer, Perigo is not meant to be used as a medical utility, and it will occasionally give erroneous descriptions of what it sees. Perigo will become more accurate in later releases, but for now I suggest avoiding sparsely populated images such as landscapes and close-up portraits, as well as ambiguous, blurry, or dark scenes.

Perigo implements a mobile variation of the deep convolutional neural model described by Vinyals et al., 2016.
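For readers curious about how this kind of model turns an image into a sentence, here is a minimal Python sketch of the general idea: an image is reduced to a feature vector, and a decoder then builds the caption one word at a time. This is not Perigo's actual code. The names `greedy_caption` and `toy_scores` are hypothetical, the decoder is replaced by a hand-written lookup table, and greedy word selection is used only because it is the simplest strategy to show; the app may choose words differently.

```python
from typing import Callable, Dict, List, Sequence

START, END = "<start>", "<end>"


def greedy_caption(
    image_features: Sequence[float],
    next_word_scores: Callable[[Sequence[float], List[str]], Dict[str, float]],
    max_words: int = 20,
) -> str:
    """Build a caption word by word, always taking the top-scoring next word."""
    words: List[str] = [START]
    for _ in range(max_words):
        scores = next_word_scores(image_features, words)
        best = max(scores, key=scores.get)
        if best == END:
            break
        words.append(best)
    # Drop the start marker before returning the sentence.
    return " ".join(words[1:])


def toy_scores(image_features: Sequence[float], words: List[str]) -> Dict[str, float]:
    """Hypothetical stand-in for a trained decoder.

    A real model would condition on image_features and the whole word
    history; this toy version looks only at the previous word.
    """
    table = {
        START: {"a": 0.9, "dog": 0.1},
        "a": {"dog": 0.8, "a": 0.2},
        "dog": {"on": 0.7, END: 0.3},
        "on": {"grass": 0.9, END: 0.1},
        "grass": {END: 1.0},
    }
    return table[words[-1]]


caption = greedy_caption([0.0], toy_scores)
print(caption)
```

In a real system the lookup table would be replaced by a trained network that scores every word in its vocabulary at each step, and the resulting sentence would be handed to the phone's speech output.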